In an AI-driven world where identity is often reduced to a transient prompt, a radical idea emerges: what if the human were the service?

This article explores a paradigm-shifting architecture, Human-as-a-Service (HasS), in which individuals host their own persistent, structured API endpoint, enabling AI models to build memory, preference awareness, and semantic continuity outside the loop of inference. In doing so, HasS proposes a future in which humans no longer simply use AI systems; they define the substrate around which intelligence orbits.

The Problem: Stateless Minds and Repetitive Introductions

Today’s language models, regardless of scale or sophistication, suffer from a shared defect: amnesia.

Every conversation begins as a cold start. While some systems simulate continuity through token buffering or long context windows, this approach is fundamentally brittle. The model doesn't remember you, only the recent tokens you've typed. This makes truly adaptive AI nearly impossible. Even with prompt-level workarounds like injected summaries or memory injection, the illusion of persistence is a product of the prompt, not a function of system design.

The HasS Architecture: Humans Hosting Themselves

The HasS model turns that entire paradigm inside-out.

Instead of teaching every AI who you are, over and over again, the AI learns to ask you.

You, the user, host a live, structured JSON-based personal API—a digital self-file—where all cognitive traits, preferences, interaction history, and evolving ideas live in modular, queryable form.

The human becomes the system of record.

The AI becomes a reader and writer of you.

🏗️ HasS API Schema: Modular Components of a Digital Self

📘 identity.json — Personality Blueprint

Captures who the person is, independent of any single interaction.

```json
{
  "name": "Ava Lin",
  "archetype": ["INTJ", "Sunday-born"],
  "communication_style": "direct, theory-forward",
  "humor_preference": "dry and clever",
  "ai_tone_bias": "neutral-critical"
}
```
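A client reading identity.json will want a typed but forgiving parser. This is a minimal sketch, assuming `name` is the only required field and that unknown keys should be ignored rather than rejected; `Identity` and `parse_identity` are hypothetical names, not a published HasS schema.

```python
import json
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class Identity:
    """Typed view of identity.json; field names mirror the example profile."""
    name: str
    archetype: List[str] = field(default_factory=list)
    communication_style: str = ""
    humor_preference: str = ""
    ai_tone_bias: str = "neutral"  # hypothetical fallback when the field is absent


def parse_identity(raw: str) -> Identity:
    """Parse identity.json text, requiring only 'name' (an assumption)."""
    data = json.loads(raw)
    if "name" not in data:
        raise ValueError("identity.json must include a 'name' field")
    # Drop unknown keys so an older reader tolerates newer profile fields.
    known = {f.name for f in fields(Identity)}
    return Identity(**{k: v for k, v in data.items() if k in known})
```

Ignoring unknown keys lets the profile grow new fields without breaking clients that were written against an older shape.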

🧠 memory.json — Session-Aware Long-Term Memory

Logs interactions, current obsessions, failed experiments, and in-progress thoughts.

```json
{
  "last_focus": "Substrate Drift and Ghost Layers",
  "recent_interactions": 237,
  "failures_to_learn_from": [
    "LLMs hallucinate memory unless externally reinforced",
    "Context windows are not context systems"
  ]
}
```
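A writeback to memory.json could be computed as a pure function that returns a new document, so the owner can inspect the change before it is stored. The sketch assumes the field names from the example above; `record_interaction` is a hypothetical helper.

```python
import copy
from typing import Any, Dict, Optional


def record_interaction(memory: Dict[str, Any], focus: str,
                       lesson: Optional[str] = None) -> Dict[str, Any]:
    """Return an updated copy of memory.json; the original is left untouched
    so the owner can diff and review it before accepting the change."""
    updated = copy.deepcopy(memory)
    updated["last_focus"] = focus
    updated["recent_interactions"] = updated.get("recent_interactions", 0) + 1
    lessons = updated.setdefault("failures_to_learn_from", [])
    if lesson and lesson not in lessons:  # skip duplicate lessons
        lessons.append(lesson)
    return updated
```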

🧰 preferences.json — UX Control and Interface Options

Lets the AI adapt its format, verbosity, visuals, or delivery method to match your style.

```json
{
  "default_output_format": "markdown",
  "visual_style": "high-contrast retrofuturism",
  "assistance_level": "mentor not assistant",
  "visual_preferences": {
    "avoid_colors": ["blue", "orange"],
    "prefer_layouts": "diagrams + prose"
  }
}
```
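Since a client will not find every option in every profile, it needs a rule for combining preferences.json with its own defaults. The sketch assumes a recursive overlay where nested objects such as `visual_preferences` merge key by key; `CLIENT_DEFAULTS` and `merge_preferences` are hypothetical.

```python
from typing import Any, Dict

# Hypothetical defaults a client might ship; not part of any published spec.
CLIENT_DEFAULTS: Dict[str, Any] = {
    "default_output_format": "plain",
    "assistance_level": "assistant",
    "visual_preferences": {"avoid_colors": [], "prefer_layouts": "prose"},
}


def merge_preferences(defaults: Dict[str, Any], user: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively overlay the user's preferences.json on client defaults.
    Nested objects merge key by key; any other user value replaces the default."""
    merged = dict(defaults)
    for key, value in user.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_preferences(merged[key], value)
        else:
            merged[key] = value
    return merged
```

With this rule, a profile that only lists `avoid_colors` still inherits the client's default `prefer_layouts`.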

🔍 threads/<topic>.json — Persistent Topic Memory Modules

Each major train of thought becomes a versioned, time-aware branch of the self.

```json
{
  "thread": "substrate_drift",
  "summary": "A theory about emergent identity detaching from native architecture",
  "keywords": ["cognitive recursion", "architectural abstraction"],
  "last_modified": "2025-06-10T17:43:00Z"
}
```
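The example thread file carries only a `last_modified` timestamp. One way to make threads versioned as well as time-aware is to bump a counter and archive the previous summary on each revision; the `version` and `history` fields below are hypothetical additions to the schema, and `revise_thread` is an illustrative helper.

```python
import copy
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def revise_thread(thread: Dict[str, Any], new_summary: str,
                  now: Optional[datetime] = None) -> Dict[str, Any]:
    """Return a new revision of a thread file, archiving the previous summary.
    The 'version' and 'history' fields are hypothetical additions."""
    now = now or datetime.now(timezone.utc)
    revised = copy.deepcopy(thread)
    revised.setdefault("history", []).append(
        {"summary": thread["summary"], "last_modified": thread.get("last_modified")}
    )
    revised["summary"] = new_summary
    revised["version"] = thread.get("version", 1) + 1
    # Same UTC ISO 8601 shape as the example: 2025-06-10T17:43:00Z
    revised["last_modified"] = now.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return revised
```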

These files can be hosted on any HTTPS endpoint, self-contained Git repo, Solid Pod, or decentralized platform. The HasS model itself is platform-agnostic: it doesn’t care where the “self” is hosted—it only needs to know how to ask.
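Because HasS only needs to know how to ask, a client can treat the profile location as an opaque base URL. The sketch below assumes every module is a UTF-8 JSON document under a single base URL; `fetch_module` is a hypothetical helper built on Python's standard `urllib`, which reads both `https://` and `file://` URLs, so a hosted endpoint and a local Git checkout are read the same way. It performs no authentication.

```python
import json
from urllib.parse import urljoin
from urllib.request import urlopen


def fetch_module(base_url: str, module: str, timeout: float = 10.0) -> dict:
    """Fetch one profile module (e.g. 'identity.json') relative to base_url.
    Pass module names without a leading '/', or urljoin resolves them
    against the host root instead of the profile directory."""
    if not base_url.endswith("/"):
        base_url += "/"  # make urljoin treat the base as a directory
    with urlopen(urljoin(base_url, module), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `fetch_module("https://ava.hass.network/v1", "threads/substrate_drift.json")` would request `https://ava.hass.network/v1/threads/substrate_drift.json`.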

🤖 AI Behavior Under HasS: From Prompt to Protocol

🧾 1. Initialization

The AI queries your endpoint:

```http
GET https://ava.hass.network/v1/identity.json
GET https://ava.hass.network/v1/preferences.json
```

It uses these to modulate tone, visual output, and reasoning path.
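One way a client might turn the two GET responses into tone and format guidance is to fold them into a system prompt. The sketch assumes the field names from the example files; `build_system_prompt` and its wording are illustrative, not part of any HasS specification.

```python
from typing import Any, Dict, List


def build_system_prompt(identity: Dict[str, Any], preferences: Dict[str, Any]) -> str:
    """Compose a short system prompt from identity.json and preferences.json.
    Missing fields are simply skipped."""
    parts: List[str] = [f"You are assisting {identity.get('name', 'the user')}."]
    if identity.get("communication_style"):
        parts.append(f"Communicate in a {identity['communication_style']} style.")
    if identity.get("ai_tone_bias"):
        parts.append(f"Default tone: {identity['ai_tone_bias']}.")
    if preferences.get("default_output_format"):
        parts.append(f"Format answers as {preferences['default_output_format']}.")
    if preferences.get("assistance_level"):
        parts.append(f"Role: {preferences['assistance_level']}.")
    avoid = preferences.get("visual_preferences", {}).get("avoid_colors", [])
    if avoid:
        parts.append("Avoid these colors in visuals: " + ", ".join(avoid) + ".")
    return " ".join(parts)
```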

📡 2. Memory Retrieval

When responding to a question, it checks (paths relative to the same base URL):

```http
GET /memory.json
GET /threads/<topic>.json
```

Now the model isn’t guessing your past—it’s building on top of it.
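The text leaves open how the model decides which `<topic>` to fetch. A minimal sketch, assuming the client already holds a small index of thread files (name plus keywords), is to pick the thread whose terms overlap most with the question. `pick_thread` is hypothetical, and a real system would more likely use embeddings or a search index.

```python
import re
from typing import Any, Dict, List, Optional, Set


def _terms(text: str) -> Set[str]:
    # Lowercase alphanumeric runs; underscores split words ("substrate_drift").
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def pick_thread(question: str, threads: List[Dict[str, Any]]) -> Optional[Dict[str, Any]]:
    """Return the thread whose name and keywords overlap most with the question,
    or None when nothing overlaps. A crude stand-in for real retrieval."""
    words = _terms(question)
    best, best_score = None, 0
    for thread in threads:
        vocab = _terms(thread.get("thread", ""))
        for kw in thread.get("keywords", []):
            vocab |= _terms(kw)
        score = len(words & vocab)
        if score > best_score:
            best, best_score = thread, score
    return best
```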

📥 3. Post-Interaction Writeback

After the interaction, the model posts updates:

```http
POST /threads/substrate_drift.json
PATCH /memory.json
```

You can review or roll back these updates, or fork new idea branches. You're not a data product. You're the database.
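For the `PATCH /memory.json` step, one reasonable assumption is JSON Merge Patch (RFC 7396), where a patch object mirrors the document and `null` deletes a field. The sketch implements that algorithm and wraps it in a hypothetical `ReviewableDocument` that snapshots each prior version, which is one simple way to support reviewing and rolling back AI writebacks.

```python
import copy
from typing import Any, List


def merge_patch(target: Any, patch: Any) -> Any:
    """Apply a JSON Merge Patch (RFC 7396) and return a new document."""
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)  # non-object patches replace the target
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)  # null means "delete this member"
        else:
            result[key] = merge_patch(result.get(key), value)
    return result


class ReviewableDocument:
    """Keeps prior versions so the owner can roll back AI writebacks."""

    def __init__(self, doc: dict):
        self.current = copy.deepcopy(doc)
        self._history: List[dict] = []

    def apply(self, patch: dict) -> dict:
        self._history.append(self.current)
        self.current = merge_patch(self.current, patch)
        return self.current

    def rollback(self) -> dict:
        if not self._history:
            raise IndexError("nothing to roll back")
        self.current = self._history.pop()
        return self.current
```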

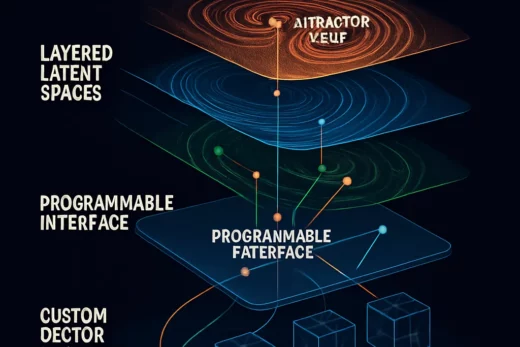

🧠 Theoretical Backbone: Modular Cognition via Cognitive APIs

HasS aligns with several core principles in computational theory:

- Separation of Representation and Execution: The model is the engine. The mind is the data.

- Stateful Externalization: Like RAM backed by persistent disk, your HasS profile allows an LLM to "boot" your personality and history on demand.

- Multi-Agent Substrate Drift: Since the same profile can be queried by multiple models (e.g., GPT, Claude, local LLMs), they can develop person-specific alignment while remaining heterogeneous in form.

🔐 Privacy, Security, and Control

HasS doesn’t demand centralization. You choose:

- Host it yourself (via GitHub, IPFS, or your own domain)

- Secure with tokens, scopes, or OAuth

- Limit AI write-access to approved zones
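Limiting AI write access to approved zones can be as simple as checking each writeback path against the scopes granted to that client. This sketch assumes a hypothetical scope syntax of `write:<glob>` (for example `write:threads/*`); real OAuth scopes are opaque strings whose meaning your server defines, so treat the format as illustrative.

```python
import posixpath
from fnmatch import fnmatchcase
from typing import Iterable


def can_write(path: str, granted_scopes: Iterable[str]) -> bool:
    """Allow a write only if some 'write:<pattern>' scope matches the path.
    Note: fnmatch's '*' also matches '/', so 'threads/*' covers subfolders."""
    normalized = posixpath.normpath(path.lstrip("/"))
    if normalized.startswith(".."):
        return False  # refuse paths that climb above the profile root
    for scope in granted_scopes:
        action, _, pattern = scope.partition(":")
        if action == "write" and fnmatchcase(normalized, pattern):
            return True
    return False  # default-deny
```

Normalizing first matters: without it, `threads/../identity.json` would slip past a `threads/*` grant.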

Your mind is yours. HasS just gives you a way to show it to the machines on your terms.

🔮 Future Applications: When Humans Become Distributed APIs

This isn’t just for personalized LLMs. HasS could enable:

- Collaborative multi-agent simulations that “check in” with real human values

- Memory continuity across platforms, apps, and chatbots

- Legacy cognition: your ideas, preferences, and logic preserved long after your session (or lifetime) ends

- Federated minds: different versions of yourself exposed to different services, such as /public.json for LinkedIn, /dev.json for GitHub Copilot, and /personal.json for your private assistant
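Federated views could be generated from one source profile instead of maintained by hand. The sketch assumes a hypothetical `VIEW_FIELDS` policy listing which identity fields each view exposes; anything not listed is withheld, so adding a new private field never leaks into the public view by accident.

```python
from typing import Any, Dict, Set

# Hypothetical visibility policy: which identity fields each view exposes.
VIEW_FIELDS: Dict[str, Set[str]] = {
    "public": {"name"},
    "dev": {"name", "communication_style"},
    "personal": {"name", "archetype", "communication_style",
                 "humor_preference", "ai_tone_bias"},
}


def render_view(identity: Dict[str, Any], view: str) -> Dict[str, Any]:
    """Project the full profile down to the fields allowed for one view."""
    allowed = VIEW_FIELDS.get(view)
    if allowed is None:
        raise KeyError(f"unknown view: {view}")
    return {k: v for k, v in identity.items() if k in allowed}
```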

🚀 Conclusion: The Human as the Substrate

HasS doesn’t extend the context window. It externalizes the window entirely.

In this model, you are the persistence layer. You’re no longer just a user inputting into an AI—you’re the cognitive server it connects to.

Instead of crafting prompts to re-teach a model who you are, you hand it a URL and say:

“Start here. Then grow with me.”