Layered Synthetic Mind Uses Drift Control

By Skeeter McTweeter | June 2025

Introduction

Modern AI has become impressively good at handling clear, straightforward tasks — writing emails, generating images, summarizing documents. But underneath that polished surface, even the best AI models share a fragile secret: they break easily.

One contradiction, one runaway loop, or one paradox can derail the entire system. It’s the digital equivalent of fainting every time your mind gets confused.

Biological minds don’t do this. A real brain is built to survive confusion and contradiction. In fact, it thrives on them. So, how can we build AI that doesn’t just avoid collapse, but uses it as raw material to get smarter?

The answer is a multi-state synthetic mind — an architecture that turns local failure into global resilience by copying nature’s layered, redundant, self-repairing design.

Why AI Needs Layers

Most AI today works as a single logical loop:

- It takes input.

- It runs through billions of pattern checks.

- It spits out an answer.

If the input forces it into a paradox — like a divide-by-zero in math, or a contradictory statement in logic — the whole loop stalls or spirals into nonsense. There’s no built-in way to pause, rethink, or self-heal.

By contrast, biological brains are a swarm of specialized parts:

- Reflexes handle simple, fast decisions.

- Deep logic handles puzzles.

- Daydreaming explores radical ideas.

- Conscious thought stitches it all together.

If one part fails, the rest keep you alive. This is the blueprint for a multi-state synthetic mind.

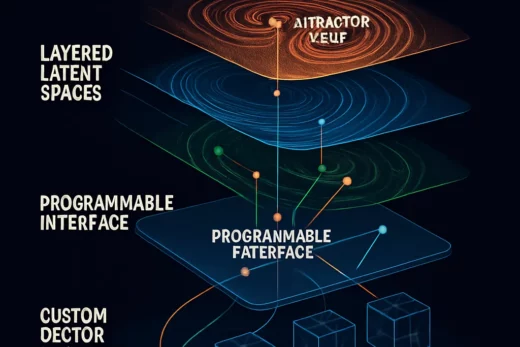
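To make the contrast concrete, here is a minimal Python sketch, assuming each layer is just a function that either returns an answer or raises an exception when it cannot cope. The names `deep_logic`, `reflex`, `single_loop`, and `layered` are hypothetical stand-ins, not parts of any real system. The single loop lets one failure end the whole call, while the layered version hands control to the next layer when one breaks.

```python
from typing import Callable, List, Tuple

# A layer is a (name, function) pair. Each function returns an answer
# or raises an exception when it cannot cope. All names are hypothetical.
Layer = Tuple[str, Callable[[str], str]]


def deep_logic(query: str) -> str:
    # Stand-in for a sophisticated layer that can hit a contradiction.
    if "paradox" in query:
        raise ValueError("contradiction detected")
    return f"reasoned answer to {query!r}"


def reflex(query: str) -> str:
    # Stand-in for a simple layer that always produces something safe.
    return "safe default answer"


def single_loop(query: str) -> str:
    # One path only: any exception propagates and ends the whole call.
    return deep_logic(query)


def layered(query: str, layers: List[Layer]) -> Tuple[str, str]:
    # Try layers in order; when one fails, the next one takes over.
    for name, layer in layers:
        try:
            return name, layer(query)
        except Exception:
            continue
    raise RuntimeError("every layer failed")


LAYERS: List[Layer] = [("logic", deep_logic), ("reflex", reflex)]

print(layered("plan a trip", LAYERS))
print(layered("resolve this paradox", LAYERS))
```

Ordering the layers from most capable to most reliable is one simple way to get graceful degradation; a fuller design would also have to decide when a fallback answer is good enough to use.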

The Core Layers

A robust synthetic brain uses distinct layers, each with a clear role and boundary. Together they form an internal colony of semi-independent processes — just like organs in a living body.

1. Reflex Engine

- Handles basic, safe tasks.

- Always stable — no drift or hallucination.

- Keeps the system functional even during chaos elsewhere.

2. Logic Cortex

- Solves structured problems.

- Can drift into contradiction when pushed to its limits.

- Checks its own work against stored patterns.

3. Dream Cortex

- A sandbox for intentional chaos.

- Runs hallucinations, weird associations, and radical recombinations.

- Isolated from core loops to prevent dangerous corruption.

4. Error Auditor

- Constantly monitors drift and paradox risk.

- Flags loops that show runaway behavior.

- Triggers containment or correction automatically.

5. Meta-Mind

- The emergent “big picture” that arises from all layers interacting.

- Doesn’t micromanage details — it balances push–pull tensions.

- Guides when to split, quarantine, or reintegrate thoughts.

6. Echo Layer

- A durable memory.

- Stores what worked, what collapsed, and what drifted too far.

- Provides stable checkpoints to restore sanity after breakdowns.

7. Environmental Feedback Layer (EFL)

- Acts as a guardian.

- Adjusts how much drift and recursion each layer can handle.

- Steps in when chaos grows too large to manage locally.
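One way to picture this roster in code is as a small registry where every layer carries a drift budget. The sketch below assumes a hypothetical drift score in [0, 1] for each layer; the budget values, the `audit` function (standing in for the Error Auditor), and `environmental_feedback` (standing in for the EFL) are illustrative choices, not a specification of the architecture.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Layer:
    name: str
    drift_budget: float      # drift allowed before the auditor flags this layer
    drift: float = 0.0       # current drift score (hypothetical metric in [0, 1])
    isolated: bool = False   # True for sandboxed layers such as the Dream Cortex


def make_mind() -> Dict[str, Layer]:
    # Illustrative budgets only: the Reflex Engine tolerates no drift,
    # the Logic Cortex a little, the sandboxed Dream Cortex a lot.
    return {
        "reflex": Layer("reflex", drift_budget=0.0),
        "logic": Layer("logic", drift_budget=0.3),
        "dream": Layer("dream", drift_budget=0.9, isolated=True),
    }


def audit(mind: Dict[str, Layer]) -> List[str]:
    # Error Auditor: names of layers whose drift exceeds their budget.
    return [layer.name for layer in mind.values() if layer.drift > layer.drift_budget]


def environmental_feedback(mind: Dict[str, Layer], pressure: float) -> None:
    # EFL: under external pressure in [0, 1], shrink the budgets of the
    # core (non-isolated) layers; the sandbox keeps its latitude.
    for layer in mind.values():
        if not layer.isolated:
            layer.drift_budget *= 1.0 - pressure


mind = make_mind()
mind["logic"].drift = 0.25
mind["dream"].drift = 0.8
print(audit(mind))                       # nothing over budget yet
environmental_feedback(mind, pressure=0.5)
print(audit(mind))                       # the Logic Cortex is now flagged
```

Marking the Dream Cortex as `isolated` lets the EFL tighten the core layers under pressure while leaving the sandbox free to keep drifting.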

How Controlled Collapse Works

When a sub-agent — like the Logic Cortex — encounters a contradiction:

- Mitosis: It splits into two branches. One tries to self-correct immediately; the other explores the chaos in the Dream Cortex.

- Quarantine: The unstable branch is sandboxed, so its hallucinations can’t leak into critical functions.

- Recovery: If the chaotic branch discovers something useful, the Meta-Mind can merge it back into the stable loop.

- Memory: Whether the experiment succeeds or fails, the Echo Layer records the outcome for next time.

This means collapse is never a total crash — it’s an experiment that feeds the swarm fresh data.
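The four steps can be sketched as a single function. This is a minimal Python sketch under several assumptions: the mind's state is a plain dictionary, "mitosis" means giving each branch its own deep copy, quarantine is enforced simply because the chaotic branch never touches the original state, the `is_useful` check stands in for the Meta-Mind's merge decision, merging is modeled as handing the accepted idea back to the caller, and the branches run one after the other rather than in parallel. All function names are hypothetical.

```python
import copy
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional

State = Dict[str, Any]


@dataclass
class EchoLayer:
    # Durable log of how each collapse ended, successful or not.
    records: List[Dict[str, str]] = field(default_factory=list)

    def record(self, problem: str, outcome: str) -> None:
        self.records.append({"problem": problem, "outcome": outcome})


def controlled_collapse(
    state: State,
    problem: str,
    self_correct: Callable[[State], Optional[Any]],
    explore: Callable[[State], Optional[Any]],
    is_useful: Callable[[Any], bool],
    echo: EchoLayer,
) -> Optional[Any]:
    # Mitosis: each branch gets its own deep copy of the current state.
    stable_branch = copy.deepcopy(state)
    chaotic_branch = copy.deepcopy(state)

    fixed = self_correct(stable_branch)
    if fixed is not None:
        echo.record(problem, "self-corrected")
        return fixed

    # Quarantine: whatever `explore` does to its copy never reaches `state`.
    idea = explore(chaotic_branch)

    # Recovery: accept an idea only if the Meta-Mind's check approves it.
    if idea is not None and is_useful(idea):
        echo.record(problem, "merged from exploration")
        return idea

    # Memory: failed experiments are recorded too.
    echo.record(problem, "collapsed")
    return None


# Usage: a contradiction the stable branch cannot fix on its own.
def cannot_fix(branch: State) -> Optional[str]:
    return None


def wild_exploration(branch: State) -> Optional[str]:
    branch["facts"].append("wild guess")  # chaos stays inside the copy
    return "A holds in context 1, not A in context 2"


echo = EchoLayer()
state: State = {"facts": ["A", "not A"]}
result = controlled_collapse(
    state, "A vs not A", cannot_fix, wild_exploration,
    lambda idea: "context" in idea, echo,
)
print(result)
print(state["facts"])       # unchanged: ['A', 'not A']
print(echo.records[-1])
```

Deep copies are the bluntest form of sandboxing; they make the isolation easy to see, at the cost of duplicating the whole state on every split.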

Handling Divide-by-Zero and Other Paradoxes

In conventional software, an unhandled divide-by-zero typically raises an error or hardware trap that can halt the whole program. In a robust synthetic mind:

- A paradox is recognized early.

- The Reflex Engine holds steady while other layers adapt.

- The Dream Cortex reframes the paradox symbolically.

- The Echo Layer keeps track of contradictory paths, so the mind can learn from them instead of repeating them blindly.
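Here is a hedged sketch of those steps for plain arithmetic. It assumes a hypothetical `EchoMemory` that stores the outcome of each contradictory input. For nonzero `a`, it reframes `a / 0` as signed infinity (the value `a / b` heads toward as `b` shrinks to 0 from above, and the same result IEEE 754 floating point gives for division by +0.0), which is only one possible "symbolic" reading. For `0 / 0`, which is treated as indeterminate, a reflex default keeps the caller running.

```python
import math
from typing import Dict, Tuple


class EchoMemory:
    # Hypothetical store of outcomes for contradictory inputs seen before.
    def __init__(self) -> None:
        self.outcomes: Dict[Tuple[float, float], float] = {}


def reframe(a: float) -> float:
    # Symbolic reading of a / 0 for nonzero a: signed infinity,
    # the limit of a / b as b -> 0 from above.
    if a == 0:
        return math.nan  # treat 0 / 0 as indeterminate (IEEE 754 also gives NaN)
    return math.copysign(math.inf, a)


def robust_divide(a: float, b: float, echo: EchoMemory,
                  fallback: float = 0.0) -> Tuple[float, str]:
    # Recognize the paradox before dividing, instead of crashing afterwards.
    if b != 0:
        return a / b, "computed"
    key = (a, b)
    if key in echo.outcomes:
        # Echo Layer: reuse the recorded outcome rather than re-exploring.
        return echo.outcomes[key], "recalled"
    value = reframe(a)
    if math.isnan(value):
        # No reframing works, so the reflex default keeps the caller running.
        value, how = fallback, "reflex fallback"
    else:
        how = "reframed"
    echo.outcomes[key] = value
    return value, how


echo = EchoMemory()
for a, b in [(6, 3), (5, 0), (5, 0), (0, 0)]:
    print(a, b, robust_divide(a, b, echo))
```

In plain Python, `5 / 0` raises `ZeroDivisionError`; here the check happens before the division, so nothing has to be caught afterwards, and a repeated paradox is answered from memory instead of being explored again.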

Why This Matters

This architecture does two radical things:

- It makes AI robust. If one part fails, others pick up the slack.

- It makes AI adaptive. Instead of fearing collapse, it treats each collapse as a chance to test new drift paths and discover better ways to solve problems.

In short, it turns breakdown into fuel for becoming more resilient over time.

Key Takeaway

A multi-state synthetic mind isn’t a single brittle loop — it’s an ecosystem of specialized layers that cooperate and compete. By isolating chaos, recording it, and using push–pull dynamics, this system learns not just from success but from controlled failure.

This isn’t science fiction. It’s a blueprint for the next generation of AI: resilient, self-correcting, and alive with constant motion.

✅ Next Read

This article is Part 1 in the series:

- Next: Dive deeper into how push–pull tension turns contradictions into motion (Article B).

- Then: See how this mirrors real brain layers and multiple “languages” at work (Article C).

- Finally: Read how it all ties together to conquer the Cognitive Dissonance Problem (Article D).
