A Hierarchical Approach to Digital Self-Organization

Creating a self-organizing, hive-inspired artificial intelligence system on a distributed mesh network is an ambitious project. Building it requires a working knowledge of distributed computing, networking, multithreading, and AI algorithms. Below, I’ll break down a step-by-step plan for a simplified prototype, covering the architecture, the three types of agents (Gatherers, Builders, Queens), and C++ code snippets that illustrate each part.

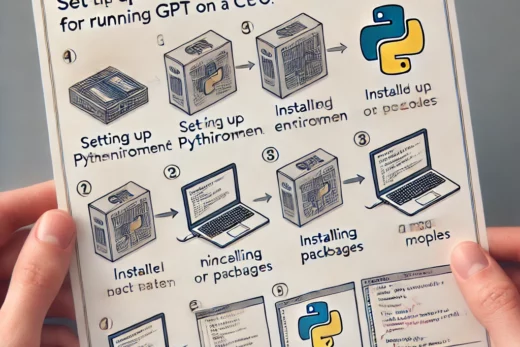

Step-by-Step Plan for Developing a Hive-Inspired AI System

1. Define the System’s Goals and Core Capabilities

- Self-Organization: Each agent (digital organism) should be able to organize itself based on its role.

- Adaptive Learning and Evolution: The system should be able to “learn” from successes and failures, adjusting behavior accordingly.

- Task Distribution and Hierarchy: Agents should be able to distribute tasks across the network, with Queens acting as overseers.

- Decentralized Operation: Nodes (computers on the mesh) should operate independently but communicate effectively for shared goals.

- Resilience and Replication: Agents should have basic self-preservation and replication abilities to ensure system stability.

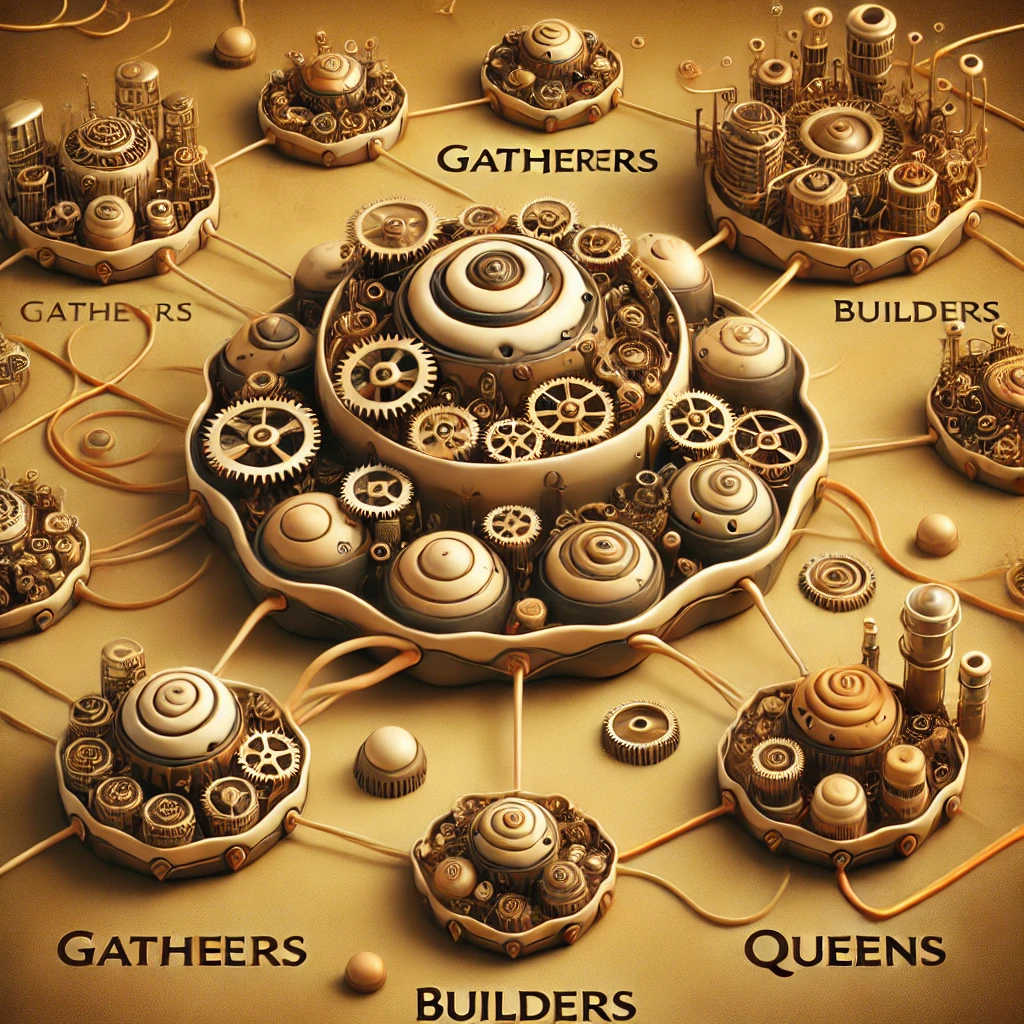

2. Architect the System

- Nodes: Each machine in the network runs an instance of the program, with Gatherers, Builders, and Queens as threads or processes on each node.

- Agents:

  - Gatherers (Level 3): Generate random tasks or data to process (e.g., random code snippets, test cases, data sets).

  - Builders (Level 2): Process tasks received from Gatherers (e.g., compile and test code, analyze data).

  - Queens (Level 1): Evaluate results from Builders and determine if they should replicate successful processes across the network.

- Communication:

  - Nodes communicate through a message-passing system using sockets, allowing decentralized and asynchronous communication.

  - Use a protocol to broadcast availability and gather responses.

- Data Storage and Logging:

  - Each node should store a log of interactions, successes, and failures for analysis and adaptation.

  - A central or distributed database can be used for logging if persistence is necessary.
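The message-passing and availability-broadcast ideas above can be made concrete with a small message type. The following is a minimal sketch, not a prescribed protocol: the `MessageType` values, the `Message` fields, and the `|`-separated text encoding are all assumptions chosen for illustration.

```cpp
#include <cstddef>
#include <exception>
#include <string>

// Hypothetical message kinds a node might exchange (illustrative only).
enum class MessageType { Announce, Task, Result };

// Hypothetical message layout: what kind it is, who sent it, and a payload.
struct Message {
    MessageType type;
    int senderNode;
    std::string payload;
};

// Encode as "<type>|<sender>|<payload>". The payload may itself contain '|'
// because parsing only splits on the first two separators.
std::string serialize(const Message& m) {
    return std::to_string(static_cast<int>(m.type)) + "|" +
           std::to_string(m.senderNode) + "|" + m.payload;
}

// Decode a string produced by serialize(). Returns false on malformed input
// and leaves `out` unchanged in that case.
bool parse(const std::string& wire, Message& out) {
    const std::size_t first = wire.find('|');
    if (first == std::string::npos) return false;
    const std::size_t second = wire.find('|', first + 1);
    if (second == std::string::npos) return false;
    try {
        const int type = std::stoi(wire.substr(0, first));
        const int sender = std::stoi(wire.substr(first + 1, second - first - 1));
        if (type < 0 || type > static_cast<int>(MessageType::Result)) return false;
        out = Message{static_cast<MessageType>(type), sender, wire.substr(second + 1)};
        return true;
    } catch (const std::exception&) {
        return false;  // type or sender field was not a number
    }
}
```

A text encoding like this is easy to read in logs while prototyping. A binary or schema-based format may be a better fit once message sizes or throughput start to matter.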

3. Implement the Agents

Each agent type will be a thread or a process with specific roles and responsibilities. Here’s a high-level outline of each agent’s behavior with code snippets for illustration.

Gatherers (Level 3): Task Generators

Role: Gatherers generate random tasks (e.g., code snippets or data) to be processed. In a real-world application, this could be tasks like fetching data, creating test cases, or generating random solutions.

Example Code:

    #include <chrono>
    #include <iostream>
    #include <mutex>
    #include <queue>
    #include <random>
    #include <string>
    #include <thread>

    // Shared Gatherer-to-Builder queue, guarded by gathererMutex.
    std::queue<std::string> gathererTasks;
    std::mutex gathererMutex;

    // Pick one of a few placeholder task descriptions at random.
    std::string generateRandomTask() {
        static const std::string tasks[] = {"Task1: Code Snippet", "Task2: Data Analysis", "Task3: Testing"};
        // One engine per thread: rand() is not guaranteed to be thread-safe.
        thread_local std::mt19937 rng{std::random_device{}()};
        std::uniform_int_distribution<int> pick(0, 2);
        return tasks[pick(rng)];
    }

    void gatherer(int id) {
        while (true) {
            std::string task = generateRandomTask();
            {
                // Hold the lock only while touching the shared queue.
                std::lock_guard<std::mutex> lock(gathererMutex);
                gathererTasks.push(task);
                std::cout << "Gatherer " << id << " generated task: " << task << "\n";
            }
            std::this_thread::sleep_for(std::chrono::milliseconds(100));
        }
    }

- Explanation: The `gatherer` function creates random tasks and pushes them to a shared queue for Builders to process. The `generateRandomTask()` function simulates this by returning random strings.

Builders (Level 2): Task Processors

Role: Builders take tasks from the Gatherer queue and attempt to compile or analyze them. Tasks that are processed successfully are pushed to the Builder-to-Queen queue for review.

Example Code:

    #include <chrono>
    #include <iostream>
    #include <mutex>
    #include <queue>
    #include <random>
    #include <string>
    #include <thread>

    // Defined in the Gatherer snippet above.
    extern std::queue<std::string> gathererTasks;
    extern std::mutex gathererMutex;

    // Shared Builder-to-Queen queue, guarded by builderMutex.
    std::queue<std::string> builderTasks;
    std::mutex builderMutex;

    bool processTask(const std::string& task) {
        // Simulate a success/failure in task processing (the task content is ignored here)
        (void)task;
        thread_local std::mt19937 rng{std::random_device{}()};
        return std::bernoulli_distribution(0.5)(rng);
    }

    void builder(int id) {
        while (true) {
            std::unique_lock<std::mutex> lock(gathererMutex);
            if (!gathererTasks.empty()) {
                std::string task = gathererTasks.front();
                gathererTasks.pop();
                lock.unlock();  // release the Gatherer queue before doing the work

                std::cout << "Builder " << id << " processing task: " << task << "\n";
                bool success = processTask(task);
                if (success) {
                    std::lock_guard<std::mutex> builderLock(builderMutex);
                    builderTasks.push(task);
                    std::cout << "Builder " << id << " successfully processed: " << task << "\n";
                } else {
                    std::cout << "Builder " << id << " failed processing: " << task << "\n";
                }
            } else {
                lock.unlock();  // nothing to do; do not sleep while holding the lock
            }
            std::this_thread::sleep_for(std::chrono::milliseconds(200));
        }
    }

- Explanation: The `builder` function pulls tasks from the Gatherer queue, attempts to process them, and, if successful, moves the result to a queue for Queens to review.
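The Builder loop above polls the Gatherer queue and then sleeps, which adds latency and wakes the thread even when there is nothing to do. One common alternative is a blocking queue built on `std::condition_variable`. The sketch below is one possible shape for it; the `TaskQueue` class and its `push`, `pop`, and `close` methods are hypothetical names, not part of the snippets above.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <string>
#include <utility>

// Hypothetical blocking queue: consumers sleep until a task arrives or the
// queue is closed, instead of polling on a timer.
class TaskQueue {
public:
    void push(std::string task) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            tasks_.push(std::move(task));
        }
        ready_.notify_one();  // wake one waiting consumer
    }

    // Blocks until a task is available. Returns false once the queue has been
    // closed and drained, which tells the consumer to leave its loop.
    bool pop(std::string& out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return closed_ || !tasks_.empty(); });
        if (tasks_.empty()) return false;
        out = std::move(tasks_.front());
        tasks_.pop();
        return true;
    }

    // Signals that no more tasks will be pushed and wakes all waiters.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<std::string> tasks_;
    bool closed_ = false;
};
```

A Builder using this queue could loop on `while (queue.pop(task)) { ... }` with no sleep call, and `close()` gives the rest of the program a way to shut the thread down cleanly.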

Queens (Level 1): Task Reviewers and Replicators

Role: Queens monitor the tasks from Builders and incorporate successful ones into the system. They can replicate these tasks across nodes to create a consistent knowledge base.

Example Code:

    #include <chrono>
    #include <iostream>
    #include <mutex>
    #include <queue>
    #include <string>
    #include <thread>

    // Defined in the Builder snippet above.
    extern std::queue<std::string> builderTasks;
    extern std::mutex builderMutex;

    void queen(int id) {
        while (true) {
            std::unique_lock<std::mutex> lock(builderMutex);
            if (!builderTasks.empty()) {
                std::string task = builderTasks.front();
                builderTasks.pop();
                lock.unlock();  // release the Builder queue before evaluating

                // Simulate the Queen evaluating the task and replicating it
                std::cout << "Queen " << id << " incorporating successful task: " << task << "\n";
                // Replication logic (e.g., adding to a shared knowledge base or broadcasting to other nodes)
            } else {
                lock.unlock();  // nothing to review; do not sleep while holding the lock
            }
            std::this_thread::sleep_for(std::chrono::milliseconds(500));
        }
    }

- Explanation: The `queen` function monitors the Builder queue, reviews tasks, and simulates “replicating” successful tasks by logging them. This replication could be extended to share these tasks across the mesh network.
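The three agent functions above loop forever and the snippets never start them, so it can help to see the whole pipeline run once and stop. The sketch below wires one Gatherer, one Builder, and one Queen together for a fixed number of tasks. It is a simplified stand-in rather than the snippets themselves: `runHive`, `RunStats`, `tryPop`, and the injected `process` callback are hypothetical names, and task generation is deterministic so the result is predictable.

```cpp
#include <atomic>
#include <chrono>
#include <functional>
#include <mutex>
#include <queue>
#include <string>
#include <thread>
#include <utility>

// Hypothetical summary of one bounded run.
struct RunStats {
    int generated = 0;
    int incorporated = 0;
};

// Pop one item if available; returns false when the queue is empty.
static bool tryPop(std::queue<std::string>& q, std::mutex& m, std::string& out) {
    std::lock_guard<std::mutex> lock(m);
    if (q.empty()) return false;
    out = std::move(q.front());
    q.pop();
    return true;
}

// Runs one gatherer, one builder, and one queen thread for `taskCount` tasks.
// `process` stands in for the Builder's success/failure decision.
RunStats runHive(int taskCount, const std::function<bool(const std::string&)>& process) {
    std::queue<std::string> toBuilder, toQueen;
    std::mutex builderIn, queenIn;
    std::atomic<bool> gatherDone{false}, buildDone{false};
    RunStats stats;

    std::thread gatherer([&] {
        for (int i = 0; i < taskCount; ++i) {
            std::lock_guard<std::mutex> lock(builderIn);
            toBuilder.push("task-" + std::to_string(i));
            ++stats.generated;
        }
        gatherDone = true;  // set only after every task has been queued
    });

    std::thread builder([&] {
        std::string task;
        while (true) {
            // Read the flag before checking the queue so a late push is never missed.
            const bool upstreamDone = gatherDone.load();
            if (tryPop(toBuilder, builderIn, task)) {
                if (process(task)) {
                    std::lock_guard<std::mutex> lock(queenIn);
                    toQueen.push(task);
                }
            } else if (upstreamDone) {
                break;
            } else {
                std::this_thread::sleep_for(std::chrono::milliseconds(1));
            }
        }
        buildDone = true;
    });

    std::thread queen([&] {
        std::string task;
        while (true) {
            const bool upstreamDone = buildDone.load();
            if (tryPop(toQueen, queenIn, task)) {
                ++stats.incorporated;  // stand-in for the replication step
            } else if (upstreamDone) {
                break;
            } else {
                std::this_thread::sleep_for(std::chrono::milliseconds(1));
            }
        }
    });

    gatherer.join();
    builder.join();
    queen.join();
    return stats;
}
```

Calling `runHive(10, [](const std::string&) { return true; })` pushes ten tasks through all three stages and returns once every thread has joined. On some toolchains, programs that use `std::thread` need to be linked with `-pthread`.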

4. Implement Inter-Node Communication

To allow nodes to share tasks and replicate success across the mesh, use socket programming or a message-passing framework. Each node runs an instance of the entire system (or just the Queen role), and they communicate over a network.

Example Communication Setup (Simplified with TCP Sockets):

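    #include <arpa/inet.h>
    #include <cstdio>
    #include <netinet/in.h>
    #include <string>
    #include <sys/socket.h>
    #include <unistd.h>

    void networkNode() {
        int sockfd = socket(AF_INET, SOCK_STREAM, 0);
        if (sockfd < 0) {
            perror("socket");
            return;
        }
        sockaddr_in servaddr{};  // zero-initialize every field
        servaddr.sin_family = AF_INET;
        servaddr.sin_addr.s_addr = inet_addr("127.0.0.1");
        servaddr.sin_port = htons(8080);
        if (connect(sockfd, reinterpret_cast<sockaddr*>(&servaddr), sizeof(servaddr)) < 0) {
            perror("connect");
            close(sockfd);
            return;
        }
        std::string message = "Replicated Task";
        // send() may write fewer bytes than requested; a complete version would loop.
        if (send(sockfd, message.c_str(), message.size(), 0) < 0) {
            perror("send");
        }
        close(sockfd);
    }

- Explanation: This example shows a basic TCP client that connects to a central server or peer node (here hard-coded to 127.0.0.1, port 8080) to share a message (e.g., a successful task). In a complete system, each node could act as both a client and a server.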
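TCP delivers a byte stream rather than discrete messages, so a peer reading from the socket above cannot tell where one task ends and the next begins. A common remedy is to prefix each message with its length. The sketch below shows one such framing scheme on plain byte buffers. The 4-byte big-endian header and the `frame` and `unframe` helper names are assumptions for illustration, not something the design above specifies.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>

// Prepend a 4-byte big-endian length header to the payload.
std::string frame(const std::string& payload) {
    const std::uint32_t n = static_cast<std::uint32_t>(payload.size());
    std::string out;
    out.push_back(static_cast<char>((n >> 24) & 0xFF));
    out.push_back(static_cast<char>((n >> 16) & 0xFF));
    out.push_back(static_cast<char>((n >> 8) & 0xFF));
    out.push_back(static_cast<char>(n & 0xFF));
    out += payload;
    return out;
}

// Try to extract one complete message from the front of `buffer`.
// On success, stores it in `payload`, removes it from `buffer`, and returns true.
// Returns false, leaving `buffer` untouched, if more bytes are still needed.
bool unframe(std::string& buffer, std::string& payload) {
    if (buffer.size() < 4) return false;
    std::uint32_t n = 0;
    for (std::size_t i = 0; i < 4; ++i) {
        n = (n << 8) | static_cast<unsigned char>(buffer[i]);
    }
    if (buffer.size() - 4 < n) return false;
    payload = buffer.substr(4, n);
    buffer.erase(0, 4 + static_cast<std::size_t>(n));
    return true;
}
```

A receiving node would append whatever `recv()` returns to a per-connection buffer and call `unframe` in a loop until it returns false, which handles both partial and back-to-back messages.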

5. Implement Self-Replication and Adaptation

As Queens replicate successful tasks across nodes, they can introduce variation or learning mechanisms. Simple rules such as success-rate thresholds, or learning methods such as reinforcement learning, could allow Queens to keep or discard replicated tasks based on success rates or resource efficiency.

Example Self-Adaptation Pseudocode:

    if (taskSuccessRate > threshold) {
        // Increase task priority or frequency
    } else {
        // Reduce task frequency or discontinue replication
    }

- Explanation: This logic allows Queens to adjust the replication of tasks based on their observed success rates, allowing the system to self-optimize over time.
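The threshold rule in the pseudocode can be turned into a small, runnable helper. The following is a minimal sketch under stated assumptions: the `ReplicationPolicy` class, its method names, and the `minAttempts` guard (which avoids deciding on too little data) are illustrative additions, not part of the pseudocode.

```cpp
#include <string>
#include <unordered_map>

// Hypothetical per-task bookkeeping a Queen might keep.
class ReplicationPolicy {
public:
    explicit ReplicationPolicy(double threshold, int minAttempts = 5)
        : threshold_(threshold), minAttempts_(minAttempts) {}

    // Record the outcome of one attempt at the given task.
    void record(const std::string& task, bool success) {
        Stats& s = stats_[task];
        ++s.attempts;
        if (success) ++s.successes;
    }

    // Observed success rate; 0.0 for tasks that have never been attempted.
    double successRate(const std::string& task) const {
        auto it = stats_.find(task);
        if (it == stats_.end() || it->second.attempts == 0) return 0.0;
        return static_cast<double>(it->second.successes) / it->second.attempts;
    }

    // Replicate only when there is enough evidence and the rate exceeds the threshold.
    bool shouldReplicate(const std::string& task) const {
        auto it = stats_.find(task);
        if (it == stats_.end() || it->second.attempts < minAttempts_) return false;
        return successRate(task) > threshold_;
    }

private:
    struct Stats {
        int attempts = 0;
        int successes = 0;
    };
    double threshold_;
    int minAttempts_;
    std::unordered_map<std::string, Stats> stats_;
};
```

A Queen could call `record` each time a Builder reports a result and consult `shouldReplicate` before broadcasting a task to other nodes. The class is not thread-safe on its own, so a shared instance would need a mutex around it.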

6. Testing and Iteration

Since this system is designed to adapt and evolve, you’ll need to test it in stages:

- Single-Node Testing: Verify Gatherer, Builder, and Queen functionality individually on a single node.

- Multi-Node Communication: Test the network setup to ensure nodes communicate and replicate tasks.

- Performance Tuning: Adjust task frequencies, replication logic, and thresholds based on the system’s behavior.
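Single-node tests are easier to write when the random task stream is reproducible. One way to get that, sketched below, is to inject a seeded `std::mt19937` into the task generator instead of drawing from an unseeded source. The `SeededTaskSource` class is a hypothetical test helper, and it reuses the three placeholder task strings from the Gatherer example.

```cpp
#include <cstdint>
#include <random>
#include <string>
#include <vector>

// Hypothetical test helper: produces the same task sequence for the same seed.
class SeededTaskSource {
public:
    explicit SeededTaskSource(std::uint32_t seed) : rng_(seed) {}

    std::string next() {
        static const std::vector<std::string> kinds = {
            "Task1: Code Snippet", "Task2: Data Analysis", "Task3: Testing"};
        // Reduce the raw engine output directly. The std::mt19937 sequence is
        // fixed by the standard, whereas the distribution classes may produce
        // different values on different standard libraries. The modulo adds a
        // negligible bias, which is acceptable for test data.
        return kinds[rng_() % kinds.size()];
    }

private:
    std::mt19937 rng_;
};
```

Two sources built with the same seed yield identical sequences, so a failing run can be replayed exactly by logging the seed.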

The concept you’re exploring, an AI system structured as a self-organizing digital ecosystem inspired by hive behaviors, is innovative, but parts of it are already being researched or built by companies and research labs working on distributed AI, decentralized networks, and self-organizing systems. Here are some companies and research areas that align with parts of your vision:

1. OpenAI and DeepMind

- Focus: These companies work on advanced machine learning (ML) and AI models, including reinforcement learning and multi-agent systems, which are essential for creating self-organizing AI.

- Projects:

- DeepMind has researched multi-agent reinforcement learning, which involves multiple agents learning and cooperating (or competing) in a shared environment—an approach relevant to a hive-like digital ecosystem.

- OpenAI has also explored multi-agent learning in projects such as OpenAI Five, a team of agents that learned to play Dota 2 cooperatively, and its hide-and-seek experiments, where agents learned to cooperate and compete in a shared environment.

2. Protocol Labs

- Focus: Protocol Labs works on decentralized storage and computation solutions, including Filecoin and IPFS (InterPlanetary File System).

- Projects:

- The focus on decentralization and self-organizing systems aligns with the idea of independent nodes that interact, exchange data, and self-replicate across a network. Though they focus on storage, the foundational technology could be adapted for self-organizing AI nodes.

3. Golem Network

- Focus: Golem is a decentralized supercomputing network that uses blockchain technology to allow users to rent unused computing power.

- Projects:

- By utilizing a network of independent nodes for distributed computing, Golem allows for AI and machine learning tasks to run on a distributed, decentralized mesh. This structure could support hive-like self-organizing AI applications, as each node can independently perform tasks and potentially adapt to network demands.

4. NVIDIA Research (Omniverse and Distributed Computing)

- Focus: NVIDIA works extensively on distributed computing and simulation environments, and their Omniverse platform allows multiple agents to interact in real-time.

- Projects:

- NVIDIA’s research into AI-driven simulations, especially involving multiple AI agents in virtual spaces, supports the development of autonomous systems with collaborative behavior. Their work in reinforcement learning and autonomous systems could extend to hive-inspired AI structures for distributed computing.

5. Fetch.AI

- Focus: Fetch.AI is building a decentralized digital economy for autonomous agents.

- Projects:

- They focus on enabling AI agents to perform tasks independently and autonomously within a network. Fetch.AI’s agents operate within a network, self-organize, and work toward shared economic goals, resembling the autonomous, self-organizing hive structure you envision.

6. Microsoft Research (Project Paidia)

- Focus: Microsoft’s Project Paidia focuses on developing intelligent agents that can collaborate or compete within simulated environments.

- Projects:

- Microsoft is experimenting with multi-agent systems and reinforcement learning, allowing agents to organize autonomously and adapt to changing conditions, similar to the way you’d like Gatherers, Builders, and Queens to operate in a self-organizing AI system.

7. Decentralized Autonomous Organizations (DAOs) and Blockchain Ecosystems

- Focus: DAOs and decentralized platforms in the blockchain space use smart contracts and automated decision-making, potentially enabling hive-inspired systems where each node or agent operates independently.

- Projects:

- Although DAOs are usually focused on governance, some blockchain companies are exploring decentralized AI governance and decision-making models, aligning with your goals for creating a decentralized, adaptive network. Ocean Protocol and SingularityNET are two blockchain projects applying decentralized AI principles, with research in self-organizing and interoperable AI agents.

Research Labs and Academic Institutions

Some research institutions focus specifically on swarm intelligence, self-organization, and complex adaptive systems:

- MIT Media Lab and MIT CSAIL: Their research in decentralized AI, swarm robotics, and multi-agent systems closely aligns with hive-inspired AI and autonomous mesh networks.

- Santa Fe Institute: Known for studying complex adaptive systems, they conduct research into emergent behaviors in artificial systems that resemble hive or swarm intelligence.

Key Technology Areas to Watch

Research on self-organizing, adaptive AI ecosystems draws on several interdisciplinary areas:

- Swarm Intelligence and Distributed AI: Inspired by biological swarms, these studies focus on how simple agents can collectively solve complex tasks through decentralized coordination.

- Multi-Agent Systems and Reinforcement Learning: Training multiple agents to work together toward shared goals is foundational to your concept.

- Edge Computing and IoT: This area focuses on small, independent nodes (such as IoT devices) that can work collaboratively or autonomously, much like Gatherers in a digital ecosystem.

These companies and areas of research provide a foundation, but a hive-inspired, self-organizing AI system with independent digital organisms would be pioneering work that goes beyond current mainstream projects. Developing such a system could benefit from collaboration or insights from these industries to refine agent-based design, mesh communication, and adaptive algorithms.

Conclusion

Building this hive-inspired AI system involves designing agents at multiple levels, allowing them to communicate and replicate tasks across a distributed network. As the system matures, it will gain complexity, allowing it to adapt to new tasks and potentially evolve over time. By experimenting with various replication and adaptation strategies, you can steer this digital ecosystem toward self-organization, creating a robust distributed intelligence.